02:19:19.903386: W tensorflow/compiler/xla/stream_executor/platform/default/dso_:64] Could not load dynamic library 'libnvinfer_plugin.so.7' dlerror: libnvinfer_plugin.so.7: cannot open shared object file: No such file or directory

02:19:19.903296: W tensorflow/compiler/xla/stream_executor/platform/default/dso_:64] Could not load dynamic library 'libnvinfer.so.7' dlerror: libnvinfer.so.7: cannot open shared object file: No such file or directory

Linear(in_features=3, out_features=2, bias=True)

(0): Linear(in_features=3, out_features=2, bias=True)

3 Inputs, 2 outputs and Activation Function

Linear(in_features=2, out_features=3, bias=True)

(0): Linear(in_features=2, out_features=3, bias=True)

2 Inputs, 3 outputs and Activation Function

Linear(in_features=2, out_features=2, bias=True)

(0): Linear(in_features=2, out_features=2, bias=True)

in the following illustration indicates the Sigmoid activation function. The above illustration can be converted to a slightly different form that is used more often in neural network documents.

print('Sigmoid(w x + b) :\n',torch.nn.Sigmoid().forward(o))

For practice, let's try another example of an input vector.

2 Inputs, 2 outputs and Activation Function
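The practice cases above (2 inputs and 2 outputs, 2 inputs and 3 outputs, 3 inputs and 2 outputs) can be checked in one loop. This is a minimal sketch, not the tutorial's original code: the helper `manual_forward`, the all-ones input vector and the `results` list are assumptions made for illustration. The product is written as `x @ W.t() + b` so that the batch dimension stays first and the bias broadcasts across the output features. With more than one output, the `torch.mm(W, x.t()) + b` form would broadcast the bias along the wrong axis.

```python
import torch
from torch import nn


def manual_forward(linear, x):
    # Hypothetical helper: x has shape (batch, in_features) and
    # linear.weight has shape (out_features, in_features).
    return torch.sigmoid(x @ linear.weight.t() + linear.bias)


results = []
for n_in, n_out in [(2, 2), (2, 3), (3, 2)]:
    net = nn.Sequential(nn.Linear(n_in, n_out), nn.Sigmoid())
    x = torch.ones(1, n_in)  # assumed input vector, shape (1, n_in)

    out = net(x)                        # forward pass through the whole network
    manual = manual_forward(net[0], x)  # Sigmoid(w x + b) computed by hand

    matches = torch.allclose(out, manual)
    results.append((n_in, n_out, tuple(out.shape), matches))
    print(n_in, 'inputs,', n_out, 'outputs :', tuple(out.shape), 'match =', matches)
```

The output shape is always `(1, n_out)`, and the manual result should agree with `net(x)` for every case.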

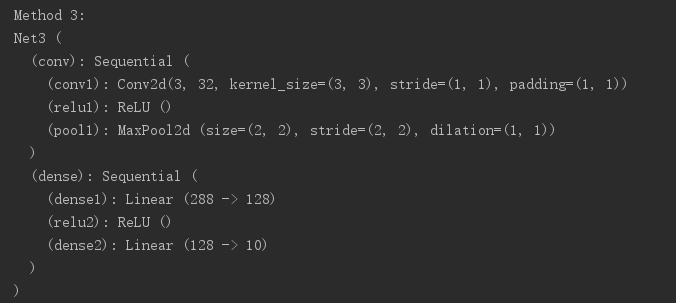

It can be converted to a slightly different form that is used more often in neural network documents.

You can print out the overall network structure and the Weight & Bias that were set automatically as follows.

(0): Linear(in_features=2, out_features=1, bias=True)

You can access each component in the sequence using an array index as shown below.

print('Network Structure of the first component :\n',net[0])

print('Weight of network :\n',net[0].weight)

=> Network Structure of the first component : Linear(in_features=2, out_features=1, bias=True)

You can access the second component as follows.

print('Activation function of network :\n',net[1])

You can evaluate the whole network using the forward() function as shown below.

print('net.forward(x) :\n',net.forward(x))

You can also evaluate the network manually as shown below. The purpose is to verify the result of the forward() function and to clarify your understanding of how forward processing works in the network.

o = torch.mm(net[0].weight,x.t()) + net[0].bias
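Put together, the steps in this section can be sketched as one runnable script. The network shape (2 inputs, 1 output, Sigmoid) and the print labels follow the text. The fixed seed, the input vector `x` and the variable names `out` and `manual` are assumptions added for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)  # assumption: fixed seed so the random initial weights repeat

# 2 inputs, 1 output, followed by a Sigmoid activation
net = nn.Sequential(
    nn.Linear(in_features=2, out_features=1, bias=True),
    nn.Sigmoid(),
)

# Overall structure, then each component by index
print('Network Structure :\n', net)
print('Network Structure of the first component :\n', net[0])
print('Weight of network :\n', net[0].weight)
print('Bias of network :\n', net[0].bias)
print('Activation function of network :\n', net[1])

x = torch.tensor([[1.0, 1.0]])  # assumed input vector, shape (1, 2)

# Evaluate the whole network
out = net.forward(x)
print('net.forward(x) :\n', out)

# Evaluate manually: o = w x + b, then apply Sigmoid
o = torch.mm(net[0].weight, x.t()) + net[0].bias
manual = torch.nn.Sigmoid().forward(o)
print('Sigmoid(w x + b) :\n', manual)
```

With a single output, both results have shape `(1, 1)` and should agree up to floating-point tolerance. Calling `net(x)` instead of `net.forward(x)` is the more common idiom and gives the same value here.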

# Torch nn sequential get layers code

The illustration above is easier to map between PyTorch code and the network structure, but it may look a little different from what you normally see in textbooks or other documents.

NOTE : The PyTorch version that I am using for this tutorial is as follows.

2 Inputs, 1 output and Activation Function

To use the nn.Sequential module, you have to import torch as below.
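A minimal sketch of the import, the version check and the network for this case. The version string printed depends on your installation and is not necessarily the one used in the tutorial. The `from torch import nn` alias is an assumption for brevity.

```python
import torch
from torch import nn  # assumed alias; torch.nn.Sequential works equally well

# Print the installed PyTorch version (the value depends on your environment)
print(torch.__version__)

# nn.Sequential chains modules in the order they are listed:
# a Linear layer with 2 inputs and 1 output, then a Sigmoid activation
net = nn.Sequential(
    nn.Linear(in_features=2, out_features=1, bias=True),
    nn.Sigmoid(),
)
print(net)
```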
